Characterizing LLM Abstention Behavior in Science QA with Context Perturbations

Oct 1, 2024

Bingbing Wen

Bill Howe

Lucy Lu Wang

Abstract

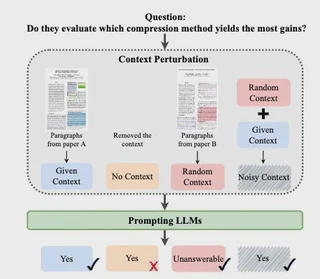

We characterize how large language models abstain from answering science questions when presented with context perturbations, providing insights into model uncertainty and reliability in scientific reasoning tasks.

Publication: EMNLP 2024 Findings